M. Ducceschi and S. Bilbao

Simulation of the Geometrically Exact Nonlinear String via Energy Quadratisation

Journal of Sound and Vibration, 2022

Nonlinear string vibration has been the subject of intense reasearch. Various musically relevant phenomena cannot be explained by linear theory alone: these include pitch glides and tension modulation effects. Geometric arguments and Hooke's law can be used to derive a nonlinear model, known in the literature as geometrically exact [1].

This model predicts an energy transfer (that is, a coupling) between the transverse and longitudinal waves. Numerically, this system is very complicated! (... and, from my perspective, a lot of fun to work with ...). Complications arise for two reasons. The first has to do with the linear parts of the longitudinal and transverse waves: these have very different wave speeds, ultimately impairing good numerical resolution. If you try to match the mesh size to either one of the wave speeds, you'll get severe warping in the other direction. The second difficulty arises as a consequence of classic time-stepping integrators, yielding an energy-conserving discretisation of the gradients on one hand, but a fully nonlinearly implicit discretisation on the other. This is a massive computational bottleneck!

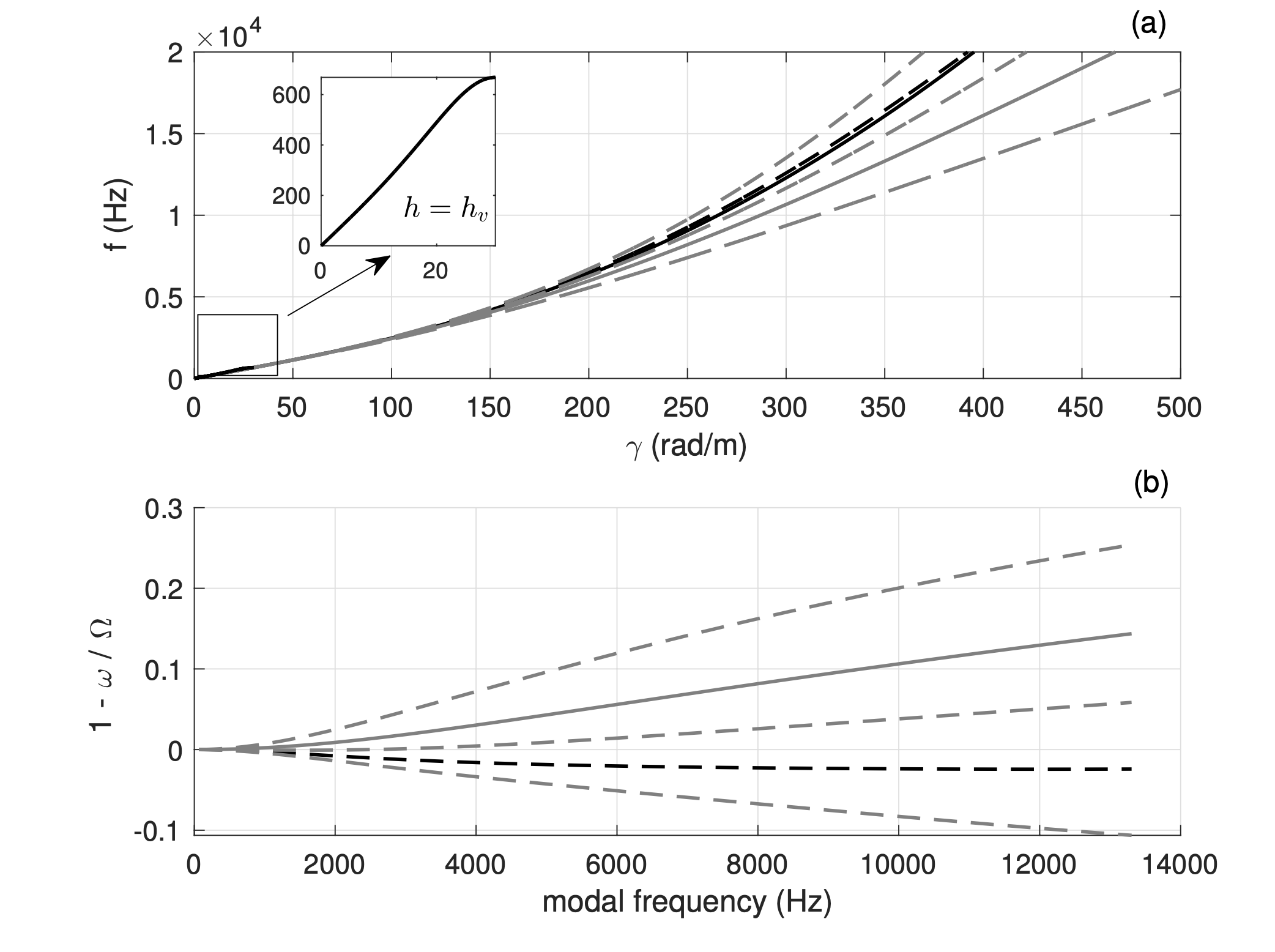

Stefan and I had a go with the problem. As a general design principle, it is always best to discretise the linear part as good as possible, that is, to make sure that the linear part runs with least amount of numerical dispersion possible with the sample rate available (for audio, say, 48 kHz or so). For the geometrically exact string, this takes a little tinkering, but we were able to discretise the transverse waves using a finite difference scheme, and the longitudinal waves using some kind of modal approach. Since, for audio, the sample rate (and hence, the time step), is used as input parameter, the grid spacing for the transverse difference scheme is chosen at the limit of stability, while the number of longitudinal modes can be selected so to match the number of actual eigenmodes of the continuous system, avoiding modal warping. The use of a free ``theta'' parameter, allows to match the numercal linear part of the transverse waves to the continuous model very, very closely.

CFL or not?

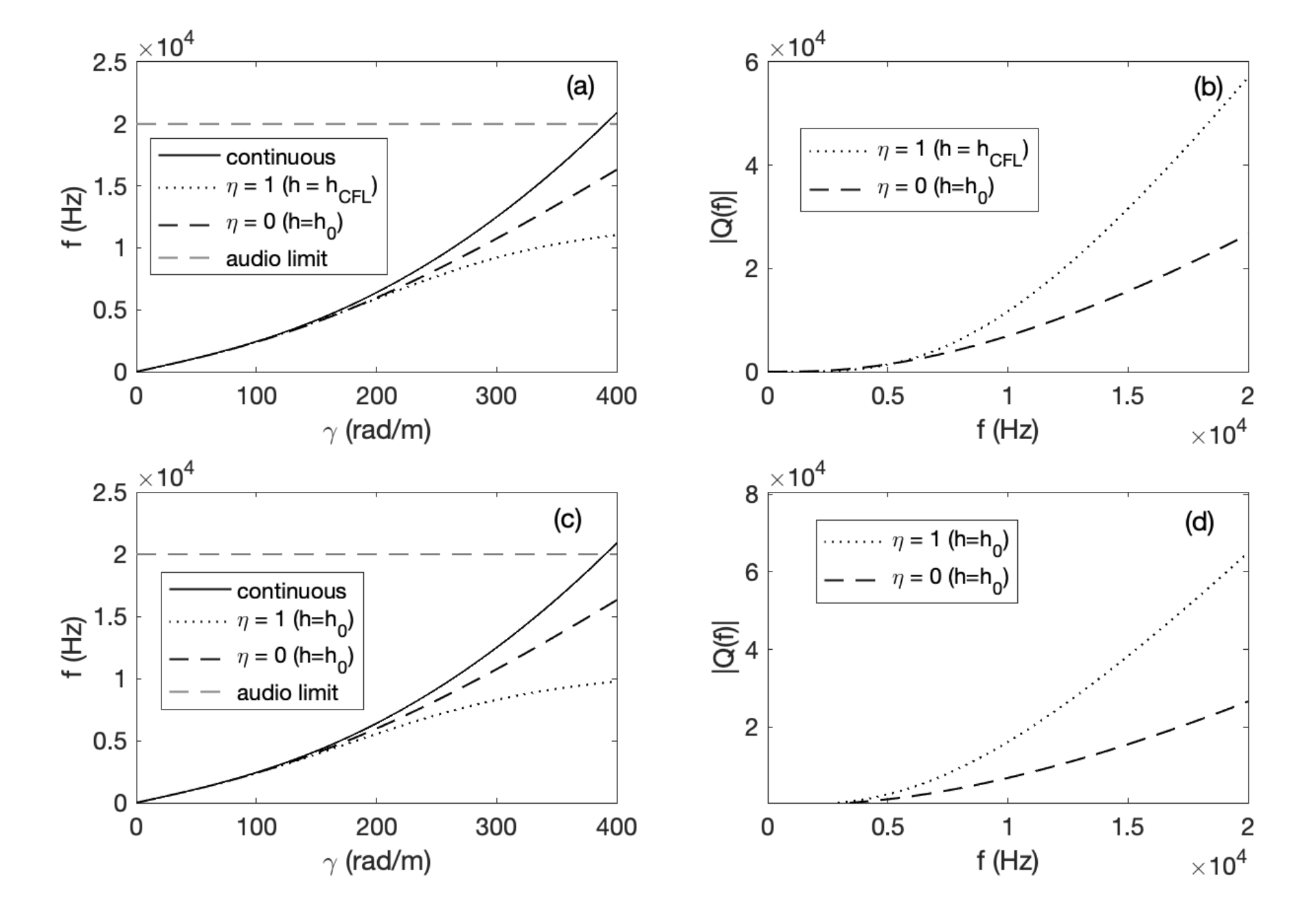

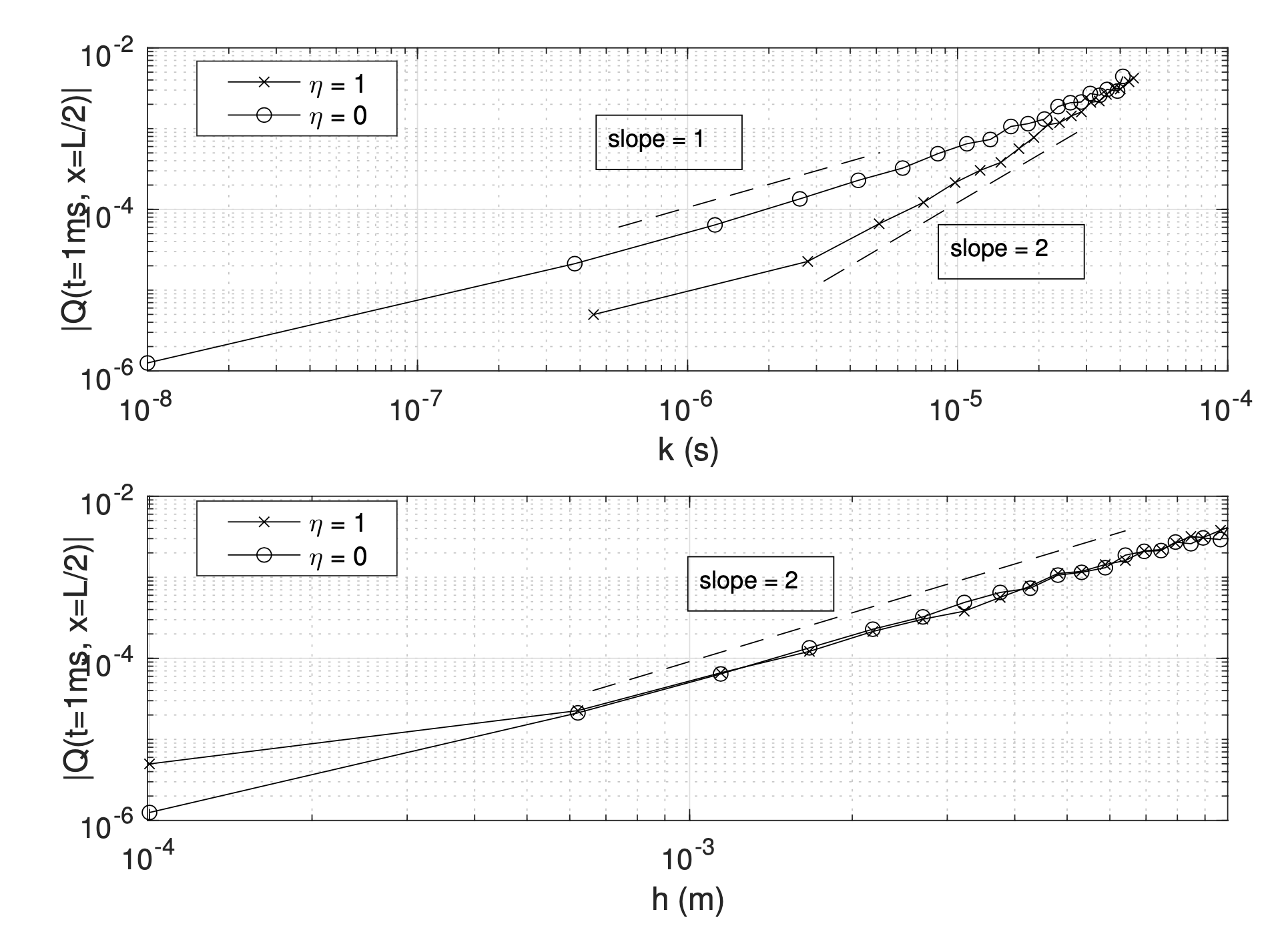

The finite difference scheme for the transverse waves is characterised by a stability condition which depends on a switch \(\eta \in \{0,1\}\). When \(\eta = 0\), the fourth-order differential operator is discretised explicitly. This has consequences on the stability, convergence and accuracy properties of the scheme overall. When \(\eta = 0\), \(k\) is not simply proportional to the grid spacing \(h\). In fact, here \(k = O(h^2)\), as opposed to a classic Courant–Friedrichs–Lewy (CFL) condition, obtained for \(\eta = 1\), and which reads \(h = c k\), where \(c\) is the wave speed.

As a consequence, the formal order of accuracy in time of the scheme with \(\eta = 0\) is only first-order! Is this scheme somewhat "less accurate" than a scheme with a pure CFL condition? This question can be addressed in a number of ways. Formal order-accuracy is, of course, one way of assessing the scheme's properties. But what happens if you look at the behaviour of the scheme in the frequency domain?

Usually, in audio, one selects the sample rate and chooses the grid spacing at the limit of stability. So, if you compare the two schemes on this basis, the implicit scheme looks kind of clumsy in the frequency domain!

The figure above shows that the wideband properties of the schemes do not scale with order-accuracy. In other words, the scheme with \(\eta = 1\) is way more accurate at lower frequencies, but numerical dispersion quickly gets worse at higher frequencies. For audio, this is not good, since a detuning of higher partials results in a string sounding ``flat''.

Okay, but what if you keep the grid spacing equal for both schemes, and select the time step at the limit of stability? Well, here the scheme with \(\eta = 0\) clearly shows first-order accuracy ... but, for the same mesh size, the corresponding sample rate for this scheme is much larger, at the limit of stability, and this allows to compensate for the formal first-order accuracy ... in fact, when plotted against the mesh size \(h\), the space-time errors for the two schemes are basically equal! Also, while the sample rate at the limit of stability is much lower for the scheme with \(\eta = 1\), the scheme is \(\eta = 0\) is nonetheless more efficient: the pentadiagonal system of the implicit scheme is a lot harder to do, than simple division ...

Quadratising the nonlinear energy

Now that the linear parts are sorted, we can have a look at the nonlinearity. As mentioned earlier, in classic time-stepping integrators, this is a real pain: on one hand, ensuring stability (that is, energy conservation) cannot be done using explicit designs. On the other hand, fully-implicit schemes result in huge algebraic nonlinear systems to be solved at each time step. This has a lot of side problems: you have to show existence and uniqueness of the numerical update; you need to decide on a nonlinear root finder (usually, Newton-Raphson), and you have to make choices regarding tolerance thresholds, halt conditions, ...

I have been investigating the possibility of alternative time-stepping discretisations, since my work on collisions [2]. The idea is really powerful: since potentials of interest in mechanics are often non-negative, you can map them onto a ``quadratised'' function. In practice, you define

\[ \psi = \sqrt{2\phi} \]

and use \(\psi\) as an extra, indepedent state variable, to be solved for. Here's the magic: you are able to express update of this huge nonlinear system as a ... linear system! So, all you need really is one linear system solve at each time step. Existence and uniqueness are proven by simple inspection of the update matrix. Efficiency is obviously greatly improved. Here's what the time-stepping scheme looks like, from the article.

The following equations result:

\[ \left( \rho A \delta_{tt} - T_0 \mathbf{D}^2 + \left(1 + \eta (\mu_{t+} - 1) \right) EI \mathbf{D}^4 \right) \mathbf{u}^n = \mathbf{D}^+ \mathbf{G}_u^n \mu_{t+} + \mathbf{D}^+ \mathbf{G}_u^n \psi^{n - 1/2}, \]

\[ \left( \rho A \delta_{tt} + T_0 \Lambda \right) \mathbf{s}^n = \mathbf{Z}^{\top} \mathbf{D}^+ \mathbf{G}_v^n \mu_{t+} + \mathbf{Z}^{\top} \mathbf{D}^+ \mathbf{G}_v^n \psi^{n - 1/2}, \]

\[ \delta_{t+} \psi^{n - 1/2} = \mathbf{G}_u^n \left( \delta_{t.} \mathbf{D}^- \mathbf{u}^n \right) + \mathbf{G}_v^n \left( \delta_{t.} \mathbf{D}^- \mathbf{Z} \mathbf{s}^n \right). \]

Matrix Decomposition

The update equation for the geometrically exact nonlinear string is represented as:

\[ T = T_0 - T^n \]

where \( T_0 \) accounts for the linear part, and \( T^n \) accounts for the nonlinearity. Matrix \( T \) is symmetric and nonsingular, ensuring invertibility at every time step. The block matrix LU decomposition optimizes computation.

We have the equation:

\[ \mathbf{T} \begin{bmatrix} \mathbf{u}^{n+1} \\ \mathbf{s}^{n+1} \end{bmatrix} = \begin{bmatrix} \mathbf{b}_u^n \\ \mathbf{b}_s^n \end{bmatrix} , \]

where \(\mathbf{T} = \mathbf{T}_0 - \tilde{\mathbf{T}}^n\), and

\[ \mathbf{T}_0 = \begin{bmatrix} \frac{\rho A}{k^2}\mathbf{I} + \frac{\eta EI}{2} \mathbf{D}^4 & 0 \\ 0 & \frac{\rho A}{k^2}\mathbf{I} \end{bmatrix} \]

and

\[ \tilde{\mathbf{T}}^n = \frac{1}{4} \begin{bmatrix} \mathbf{D}^+ \mathbf{G}_u^n \mathbf{G}_u^n \mathbf{D}^- & \mathbf{D}^+ \mathbf{G}_u^n \mathbf{G}_v^n \mathbf{D}^- - \mathbf{Z} \\ \mathbf{Z}^{\top} \mathbf{D}^+ \mathbf{G}_u^n \mathbf{G}_v^n \mathbf{D}^- & \mathbf{Z}^{\top} \mathbf{D}^+ \mathbf{G}_v^n \mathbf{G}_v^n \mathbf{D}^- - \mathbf{Z} \end{bmatrix}. \]

In pratice, T is a block matrix, where the top left block is sparse, and is the largest (of size \(N \times N\)), and where the bottom right is full, and is the smallest (of size \(N_s \times N_s\)). Here \(N\) is the number of grid points for the transverse displacement, and \(N_s\) is the number of modal coordinates for the longitudinal component. Typically \(N_s \ll N \). In order to take advantadge of the sparsity of the upper left block, a block matrix LU decomposition can be employed. Details are given in the article. The proposed scheme, denoted LU_NIT, is compared to the two schemes given in [3]. The computational advantage of the current algorithm is pretty evident, especially compared to the fully-imlicit scheme.

Example Sounds

Here are example sounds. For sound synthesis, loss and source terms are added. The simulations are run using this Matlab script. The scheme is quite efficient: at audio rate, for a string with “average” parameters, compute time is less than 10x real-time in Matlab.

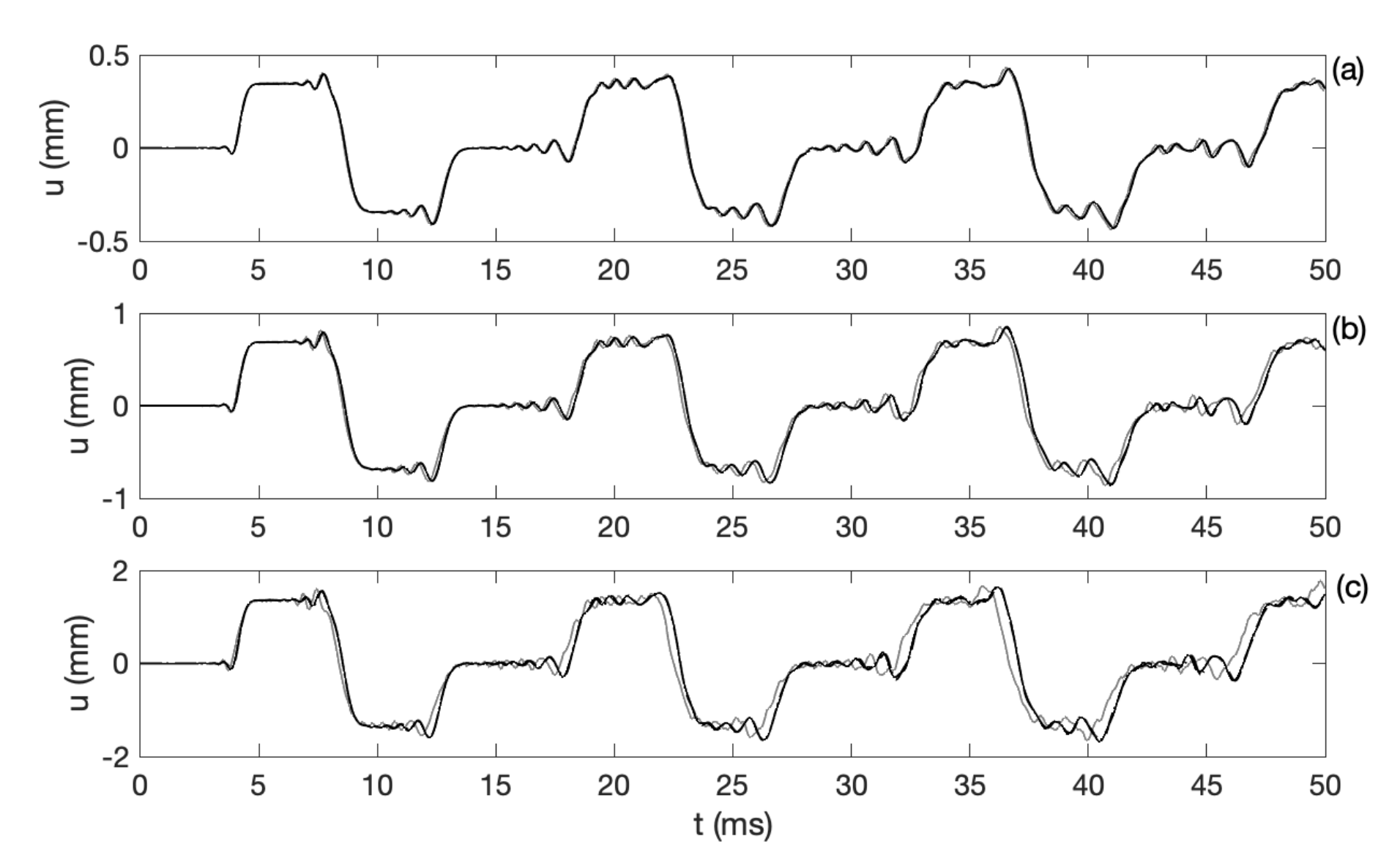

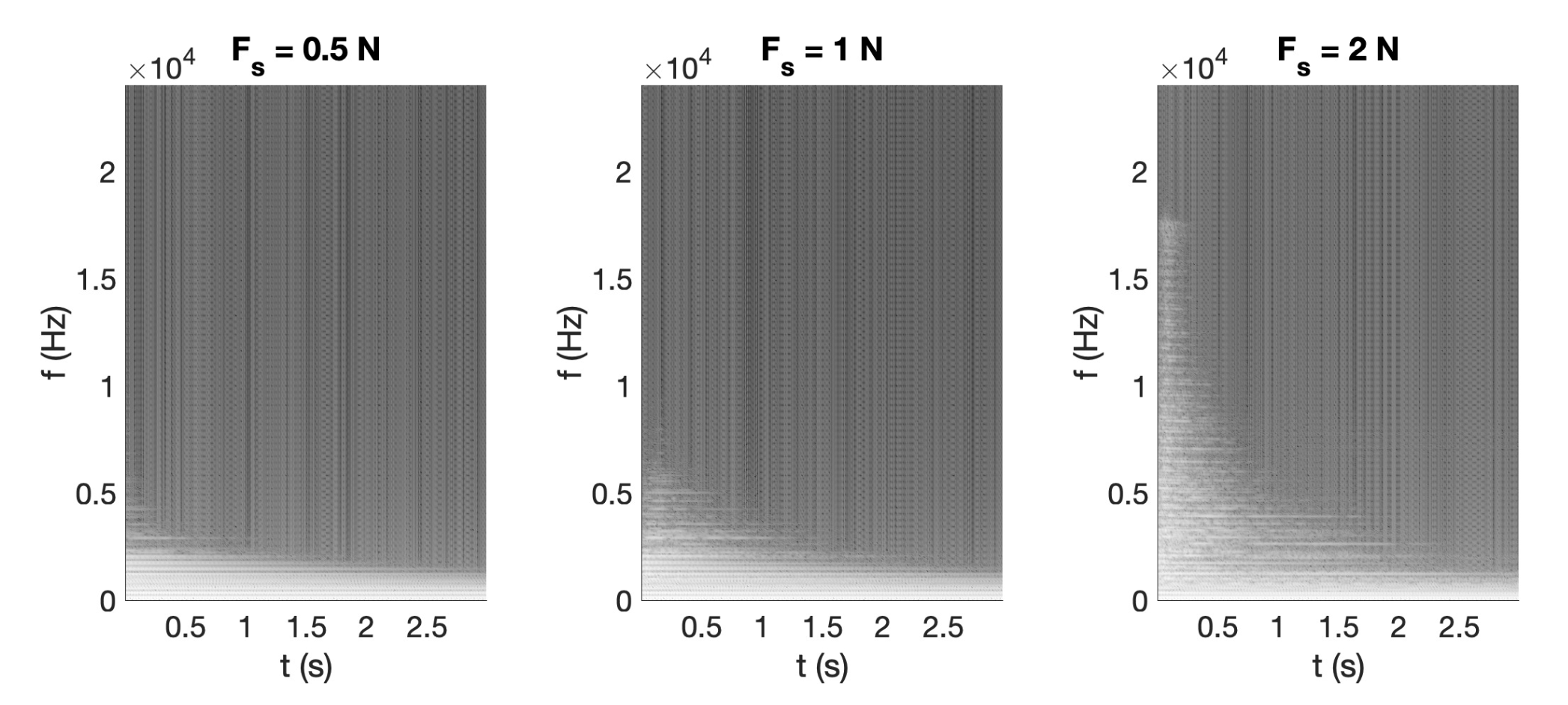

Here are some test cases. First, the string is plucked with a small force (0.5 Newtons), and the output of the scheme at audio rate (48 kHz) and 5X oversampling are compared. I used ρ = 8000, L = 1.0, T0 = 40, E = 2 · 1011, and r = 2.9 · 10-4 (SI units). The forcing acts at 0.72 L, and the forcing amplitudes are as indicated.

| 0.5 Newtons, 48 kHz | |

| 0.5 Newtons, 5X oversampling |

Then, the string is plucked with higher input forces. The examples below are all run at audio rate (48 kHz) and compared to oversampled solutions. The scheme captures most aspects of nonlinear string vibration, even at audio rate.

| F = 1.0 N, 48 kHz | |

| F = 1.0 N, 5X oversampling | |

| F = 2.0 N, 48 kHz | |

| F = 2.0 N, 5X oversampling |

Experimenting with the Physics

You can get interesting sounds using low-pitched strings. I used ρ = 12000, L = 1.5, T0 = 40, E = 2 · 1011, and a radius r = 2.9 · 10-4 (SI units). Force is applied using a raised cosine, with amplitude 2.5 N and contact duration 0.0008 seconds.

| T0 = 40 N, 48 kHz | |

| T0 = 20 N, 48 kHz |

And here’s the same string, but with different forcing parameters. You can really hear the phantom partials.

| tw = 0.002 s, F = 2.5 N, 48 kHz | |

| tw = 0.002 s, F = 0.5 N, 48 kHz |

Experimenting with unrealistic parameters yields interesting sounds. Here's a beam with ρ = 12000, L = 3.5, T0 = 20, E = 2 · 1011, and a radius r = 4.9 · 10-4. Forcing parameters are F = 4.5, tw = 0.002 seconds. Sound designers, take note!

| Long Beam, 48 kHz |

References

- J. Chabassier, P. Joly, Energy preserving schemes for nonlinear Hamiltonian systems of wave equations: Application to the vibrating piano string, Computer Methods in Applied Mechanics and Engineering, Vol. 199(45):2779–2795, 2010

- M. Ducceschi, S. Bilbao, S. Willemsen, S. Serafin, Linearly-implicit schemes for collisions in musical acoustics based on energy quadratisation, Journal of the Acoustical Society of America, Vol. 149(5):3502–3516, 2021

- M. Ducceschi, S. Bilbao. Non-iterative, conservative schemes for geometrically exact nonlinear string vibration, Proceedings of the International Congress on Acoustics (ICA2019), Aachen, Germany, 2019